Key Takeaways

- Building AI in healthcare almost always runs into the same barrier: too little usable data, which makes synthetic training data, augmentation, and transfer learning essential tools.

- Synthetic data can extend datasets and protect privacy, but without rigorous validation it risks producing artifacts that mislead models instead of improving them.

- Data augmentation adds variety and balance to small datasets, while transfer learning adapts existing models to medical tasks and shortens development cycles.

- Validation across statistical checks, expert review, performance metrics, and privacy safeguards is what separates experiments that stay in the lab from systems that work in real hospitals.

- The future of medical AI will not be decided by who has the most data, but by who makes the smartest use of the data they have.

Every attempt to build medical AI runs into the same obstacle sooner or later: there is never enough data.

Building high-quality machine learning models in healthcare is often hindered by the limited availability of large, well-annotated datasets. Data privacy regulations, the high cost of expert labeling, and the rarity of certain medical conditions all contribute to this scarcity. The result is small, imbalanced, or incomplete datasets. These limitations can lead to overfitting, poor generalization, and unreliable performance in real-world clinical settings.

To work around these constraints, researchers and practitioners increasingly turn to three broad strategies: synthetic data generation, augmentation, and transfer learning. Each tackles the scarcity problem from a different angle.

In this blog post, we’ll break down how to work with limited data in healthcare AI. You’ll see how synthetic data can be generated, how augmentation expands small datasets, and how transfer learning adapts existing models to medical tasks. We’ll also cover validation, ethics, and hands-on advice to help you decide when synthetic training data is the right path. Read on for a practical guide to building AI when real data is scarce.

If you're looking to build AI in healthcare but are struggling with limited data, you can also talk to our team about how to validate synthetic training data for real-world use.

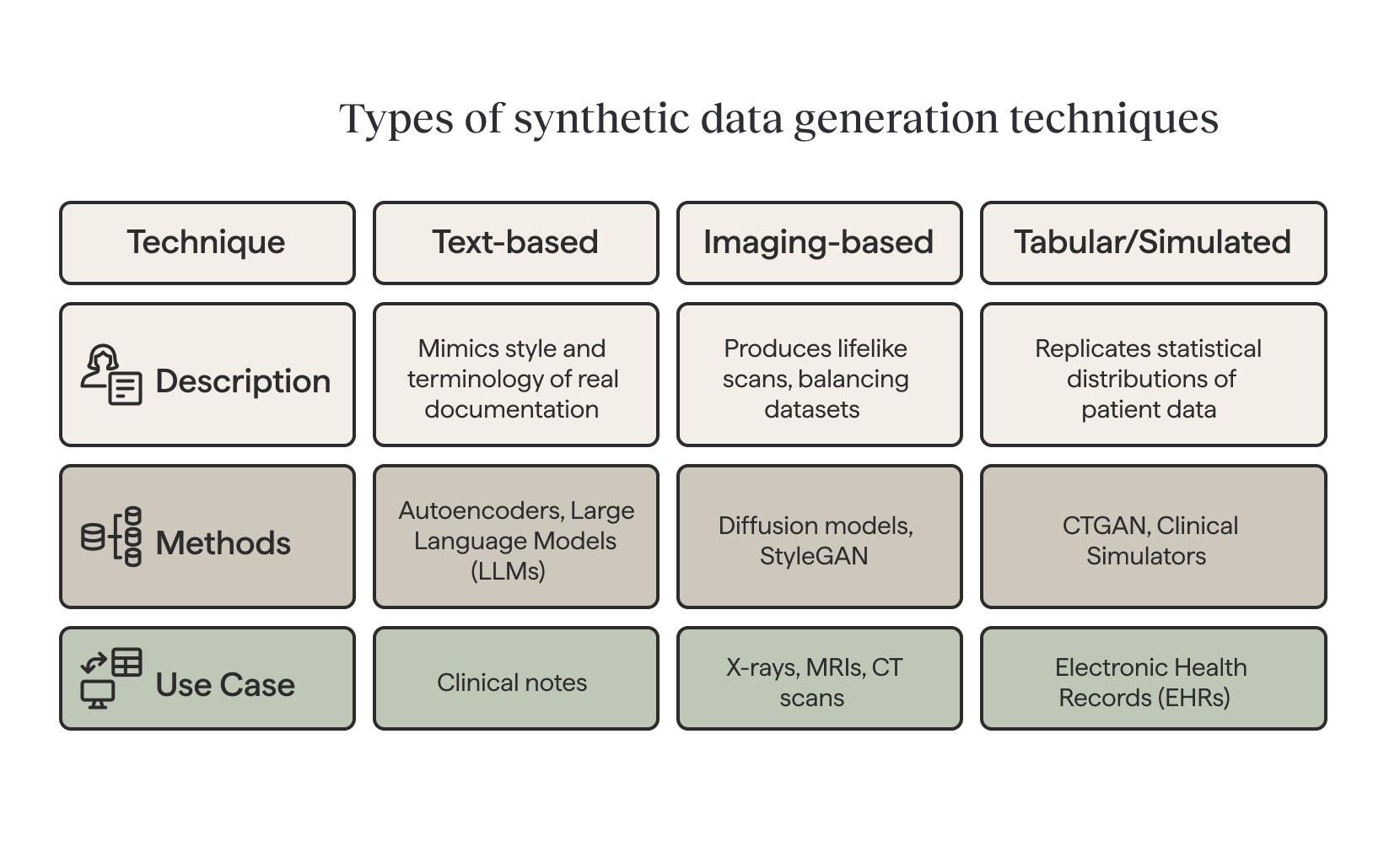

Synthetic data generation techniques

Imagine trying to train a medical AI system to detect rare diseases, but you only have a handful of patient cases. This is where synthetic data becomes a game-changer. By creating realistic, artificial records that reflect genuine medical patterns, researchers can fill gaps and strengthen model performance without relying solely on scarce or sensitive patient data.

In healthcare, synthetic patient data can be generated to train diagnostic models while respecting patient confidentiality.

Text-based generation

Different types of data call for different approaches. For clinical text, autoencoder-based methods and large language models (LLMs) can generate synthetic notes that mimic the style and terminology of real documentation. Variational autoencoders (VAEs) compress input data into a lower-dimensional latent distribution. Sampling new points from that distribution and decoding them produces new records that resemble the originals without copying them.
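The compress, sample, and decode cycle can be sketched in NumPy on numeric feature vectors. This is an illustration only: the weights are random rather than trained, the linear encoder and decoder are simplifications, and a real text VAE would operate on token embeddings with recurrent or transformer layers. All sizes and names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
INPUT_DIM, LATENT_DIM = 8, 2  # hypothetical sizes: 8 features -> 2-D latent space

# Randomly initialised weights stand in for a trained encoder/decoder.
W_mu = rng.normal(scale=0.1, size=(INPUT_DIM, LATENT_DIM))
W_logvar = rng.normal(scale=0.1, size=(INPUT_DIM, LATENT_DIM))
W_dec = rng.normal(scale=0.1, size=(LATENT_DIM, INPUT_DIM))


def encode(x):
    """Compress inputs to the mean and log-variance of a Gaussian in latent space."""
    return x @ W_mu, x @ W_logvar


def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps (keeps sampling differentiable during training)."""
    return mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)


def decode(z):
    """Map latent points back to the input space."""
    return z @ W_dec


def vae_loss(x, x_hat, mu, logvar):
    """Reconstruction error plus KL divergence from the N(0, I) prior."""
    recon = np.mean(np.sum((x - x_hat) ** 2, axis=1))
    kl = np.mean(-0.5 * np.sum(1 + logvar - mu**2 - np.exp(logvar), axis=1))
    return recon + kl


def generate(n_samples):
    """New records come from decoding fresh draws from the prior."""
    return decode(rng.standard_normal((n_samples, LATENT_DIM)))


batch = rng.normal(size=(4, INPUT_DIM))
mu, logvar = encode(batch)
loss = vae_loss(batch, decode(reparameterize(mu, logvar)), mu, logvar)
synthetic = generate(10)
```

During training, `vae_loss` would be minimized with respect to the weights; generation then only needs the decoder.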

Imaging-based generation

For medical imaging, advanced generative models such as diffusion models and StyleGAN can produce lifelike X-rays, MRIs, or CT scans. By generating additional examples of rare findings, they help balance datasets that would otherwise be skewed toward common conditions, which can improve model performance.

Tabular and simulated data

In structured datasets like electronic health records (EHRs), techniques such as CTGAN can replicate the statistical distributions of patient data. Conditional sampling allows for targeted cohorts, for example generating synthetic records for diabetic patients over 60. Beyond purely generative methods, clinical simulators (from physiological heart and lung models to synthetic EHR generators) offer controllable, high-fidelity datasets for training and testing AI systems. Across these approaches, synthetic data can take many forms, including text, numbers, tables, images, and video.
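CTGAN itself needs a deep-learning stack, so the sketch below swaps in a much simpler generator (a multivariate Gaussian fitted to the diabetic subgroup) purely to show how conditional sampling targets a cohort. The "real" data is invented, and rejection sampling enforces the age condition; libraries such as SDV offer conditional sampling for CTGAN models directly.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy "real" cohort (invented for illustration): age, HbA1c (%), BMI, diabetic flag.
n = 500
age = rng.uniform(30, 85, n)
diabetic = (rng.random(n) < 0.3).astype(int)
hba1c = np.where(diabetic == 1, 7.5, 5.4) + 0.01 * (age - 50) + rng.normal(0, 0.5, n)
bmi = 26 + 3 * diabetic + rng.normal(0, 3, n)
real = np.column_stack([age, hba1c, bmi, diabetic])


def fit_gaussian(data):
    """Simplified stand-in generator: mean vector and covariance of the columns."""
    return data.mean(axis=0), np.cov(data, rowvar=False)


def sample_conditional(real_data, n_samples, min_age=60.0, max_tries=100):
    """Rejection-sample synthetic diabetic patients older than min_age."""
    diabetic_rows = real_data[real_data[:, 3] == 1][:, :3]  # condition on the flag
    mean, cov = fit_gaussian(diabetic_rows)
    accepted = []
    for _ in range(max_tries):
        draws = rng.multivariate_normal(mean, cov, size=n_samples)
        accepted.extend(draws[draws[:, 0] > min_age])  # keep only the target age band
        if len(accepted) >= n_samples:
            break
    rows = np.array(accepted[:n_samples])
    return np.column_stack([rows, np.ones(len(rows))])  # re-attach the diabetic flag


cohort = sample_conditional(real, 200)
```

A real pipeline would also clip or reject physiologically implausible values (for example, ages above a realistic maximum) before use.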

In one study, GPT-4o generated 6,166 synthetic case records using zero-shot prompting. In the study's second phase, 92.3% of the 13 clinical parameters examined (12 of 13) showed no statistically significant difference from real data, indicating high statistical fidelity. (source)

Of course, synthetic data is most powerful when used to augment, not replace, real-world data. Careful validation is essential to ensure the generated records improve robustness without introducing artifacts or hidden biases.

Data augmentation strategies

Data augmentation is often the first line of defense against limited training data in healthcare AI. It creates modified versions of existing samples, adding variety that improves generalization without collecting new patient data. These modified samples supplement the original dataset and can improve model performance.

Geometric and photometric adjustments

In the case of medical images, geometric transformations must respect anatomical constraints. For instance, rotations are typically limited to ±15–30° for chest X-rays but can extend to ±180° for dermoscopy, where skin lesions have no canonical orientation. Horizontal flipping requires caution with asymmetric anatomy such as the heart, which normally sits on the left side of the chest. Photometric adjustments simulate acquisition differences through contrast jittering, brightness shifts, or Gaussian noise. CT and MRI scans, meanwhile, benefit from specialized window and level perturbations.
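Here is a minimal sketch of anatomy-aware image augmentation using NumPy and SciPy. The rotation limits follow the ranges above (taking the conservative 15° end for chest X-rays), no flips are applied, and the jitter magnitudes and the 40/400 soft-tissue CT window are illustrative assumptions rather than validated settings.

```python
import numpy as np
from scipy import ndimage

rng = np.random.default_rng(0)

# Maximum rotation (degrees) per modality; illustrative values.
MAX_ROTATION = {"chest_xray": 15.0, "dermoscopy": 180.0}


def augment_2d(image, modality):
    """Rotate within a modality-specific range and apply photometric jitter.

    image: 2-D float array scaled to [0, 1]. No horizontal flip is applied,
    so left-right anatomy (e.g. heart position on chest X-rays) is preserved.
    """
    limit = MAX_ROTATION[modality]
    angle = rng.uniform(-limit, limit)
    out = ndimage.rotate(image, angle, reshape=False, order=1, mode="nearest")
    m = out.mean()
    out = (out - m) * rng.uniform(0.9, 1.1) + m   # contrast jitter around the mean
    out = out + rng.uniform(-0.05, 0.05)           # brightness shift
    out = out + rng.normal(0.0, 0.01, out.shape)   # mild Gaussian noise
    return np.clip(out, 0.0, 1.0)


def perturb_ct_window(hu_image, level=40.0, width=400.0):
    """Apply a jittered window/level to a CT slice given in Hounsfield units."""
    level += rng.uniform(-10, 10)
    width *= rng.uniform(0.9, 1.1)
    low, high = level - width / 2, level + width / 2
    return np.clip((hu_image - low) / (high - low), 0.0, 1.0)


xray = rng.random((64, 64))
aug = augment_2d(xray, "chest_xray")
ct_slice = rng.uniform(-1000, 1000, size=(64, 64))
windowed = perturb_ct_window(ct_slice)
```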

Advanced techniques such as elastic deformation and StyleGAN2-ADA can generate realistic anatomical and pathological variations. Generative models like StyleGAN2-ADA are most effective with 5,000+ medical images but can also be fine-tuned on smaller datasets. Generated samples, however, always require clinical validation before they are used for training.
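Elastic deformation is commonly implemented by smoothing a random displacement field and resampling the image along it. The sketch below assumes a single 2-D grayscale image; `alpha` (strength) and `sigma` (smoothness) are illustrative values that would need tuning, and clinical review of the outputs, for each modality.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, map_coordinates


def elastic_deform(image, alpha=30.0, sigma=4.0, seed=None):
    """Warp a 2-D image with a smooth random displacement field.

    Random per-pixel offsets are smoothed by a Gaussian (sigma) and scaled
    by alpha, then the image is resampled at the displaced coordinates.
    """
    rng = np.random.default_rng(seed)
    shape = image.shape
    dx = gaussian_filter(rng.uniform(-1, 1, shape), sigma) * alpha
    dy = gaussian_filter(rng.uniform(-1, 1, shape), sigma) * alpha
    rows, cols = np.meshgrid(np.arange(shape[0]), np.arange(shape[1]), indexing="ij")
    coords = np.array([rows + dy, cols + dx])
    # Bilinear interpolation; "reflect" avoids empty borders.
    return map_coordinates(image, coords, order=1, mode="reflect")


img = np.random.default_rng(1).random((32, 32))
deformed = elastic_deform(img, seed=0)
```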

Textual augmentation methods

By contrast, text augmentation has to preserve medical meaning while adding linguistic variety. Synonym replacement can rely on UMLS APIs for terminology and BioBERT embeddings for context, while critical entities such as ICD-10 codes, drug names, and lab values are explicitly protected so they remain intact.
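The sketch below shows entity-protected synonym replacement in plain Python. The tiny `SYNONYMS` lexicon is a hypothetical stand-in for UMLS-derived synonyms, and the regular expressions for ICD-10-style codes, doses, lab values, and drug names are illustrative and far from exhaustive.

```python
import random
import re

# Hypothetical mini-lexicon standing in for UMLS-derived synonyms.
SYNONYMS = {
    "shortness of breath": ["dyspnea"],
    "high blood pressure": ["hypertension"],
    "heart attack": ["myocardial infarction"],
}

# Entities that must never change (illustrative patterns only).
PROTECTED_PATTERNS = [
    r"\b[A-Z]\d{2}(?:\.[0-9A-Z]{1,4})?\b",            # ICD-10-style codes, e.g. I10, E11.9
    r"\b\d+(?:\.\d+)?\s?(?:mg/dL|mmol/L|mg|%)",       # doses and lab values
    r"\b(?:metformin|lisinopril|aspirin)\b",          # drug names (toy list)
]


def protected_spans(text):
    """Return (start, end) spans covered by protected entities."""
    spans = []
    for pattern in PROTECTED_PATTERNS:
        spans.extend(m.span() for m in re.finditer(pattern, text, flags=re.IGNORECASE))
    return spans


def replace_synonyms(text, seed=0):
    """Swap known phrases for synonyms unless they overlap a protected entity."""
    rng = random.Random(seed)
    for phrase, options in SYNONYMS.items():
        matches = list(re.finditer(re.escape(phrase), text, flags=re.IGNORECASE))
        for match in reversed(matches):  # right-to-left keeps earlier offsets valid
            start, end = match.span()
            if any(start < e and s < end for s, e in protected_spans(text)):
                continue
            text = text[:start] + rng.choice(options) + text[end:]
    return text


note = "Patient reports shortness of breath; history of high blood pressure (I10), on lisinopril 10 mg."
augmented_note = replace_synonyms(note)
```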

Back-translation through an intermediate language (EN→DE→EN for conservative paraphrases, EN→ZH→EN for more aggressive shifts) adds variety, and keeping only outputs with high BLEU scores against the original helps ensure quality. LLM-based paraphrasing with moderate sampling temperatures (0.3–0.7) also works, provided prompts explicitly require the model to preserve clinical entities, measurements, and timelines.
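A BLEU filter for back-translation can be sketched as follows. `to_pivot` and `from_pivot` are hypothetical callables that would wrap a machine translation system. The BLEU variant adds one to the numerator and denominator of the 2- to 4-gram precisions so short sentences are not scored zero, and the 0.3 threshold is an arbitrary placeholder.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, candidate, max_n=4):
    """Sentence-level BLEU with add-one smoothing for n >= 2."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    if not cand:
        return 0.0
    log_precision = 0.0
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = max(len(cand) - n + 1, 0)
        if n == 1:
            if overlap == 0:
                return 0.0  # no shared words at all
            precision = overlap / total
        else:
            precision = (overlap + 1) / (total + 1)
        log_precision += math.log(precision) / max_n
    # Brevity penalty discourages paraphrases that drop content.
    brevity = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return brevity * math.exp(log_precision)


def back_translate(text, to_pivot, from_pivot, min_bleu=0.3):
    """Round-trip text through a pivot language; keep it only if BLEU is high enough."""
    paraphrase = from_pivot(to_pivot(text))
    score = sentence_bleu(text, paraphrase)
    return (paraphrase, score) if score >= min_bleu else (None, score)
```

In practice the threshold would be tuned on a sample of outputs reviewed by clinicians, and an entity check like the synonym example's protected patterns can be applied to each kept paraphrase.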

Time-series and signal augmentation

When it comes to tabular and time-series data, methods must maintain both statistical integrity and physiological validity. SMOTE addresses class imbalance, while controlled noise injection and feature permutation create safe variations.
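For tabular vitals, controlled noise injection can be paired with plausibility bounds so that augmented values stay physiologically possible. The variable names and ranges below are illustrative assumptions, not clinical reference values.

```python
import numpy as np

# Illustrative plausibility bounds only; real limits should be agreed with clinicians.
PLAUSIBLE_RANGES = {"heart_rate": (30, 220), "systolic_bp": (60, 250), "spo2": (70, 100)}


def jitter_vitals(records, rel_noise=0.02, seed=0):
    """Multiply each value by (1 + small Gaussian noise), then clip to a plausible range."""
    rng = np.random.default_rng(seed)
    augmented = {}
    for name, values in records.items():
        values = np.asarray(values, dtype=float)
        noisy = values * (1.0 + rng.normal(0.0, rel_noise, size=values.shape))
        low, high = PLAUSIBLE_RANGES[name]
        augmented[name] = np.clip(noisy, low, high)
    return augmented


vitals = {"heart_rate": [72, 110, 215], "systolic_bp": [120, 95, 248], "spo2": [98, 91, 99.5]}
jittered = jitter_vitals(vitals)
```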

Signal augmentation for ECG, EEG, and other physiological data may involve time warping, amplitude scaling, or frequency-dependent jittering via FFT. Specialized GANs, such as ECG-GAN, can even generate entire synthetic signals conditioned on patient metadata.
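The three signal transformations named above can be sketched with NumPy alone. The knot count, scaling range, 15 Hz corner frequency, and noise levels are assumptions chosen for illustration; real settings should be checked against the signal's morphology (for ECG, the P-QRS-T shape and intervals).

```python
import numpy as np

rng = np.random.default_rng(0)


def time_warp(signal, n_knots=6, sigma=0.1):
    """Resample along a smooth, monotonic random time axis (same length)."""
    n = len(signal)
    knots = rng.normal(1.0, sigma, size=n_knots)  # local playback speeds
    speed = np.interp(np.linspace(0, n_knots - 1, n), np.arange(n_knots), knots)
    warped = np.cumsum(np.clip(speed, 0.1, None))  # positive speed keeps time monotonic
    warped = (warped - warped[0]) / (warped[-1] - warped[0]) * (n - 1)
    return np.interp(warped, np.arange(n), signal)


def scale_amplitude(signal, low=0.9, high=1.1):
    """Multiply the whole signal by one random gain."""
    return signal * rng.uniform(low, high)


def spectral_jitter(signal, fs, sigma=0.05, corner_hz=15.0):
    """Randomly perturb Fourier coefficients, more strongly above corner_hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    weight = np.clip(freqs / corner_hz, 0.0, 1.0)  # 0 at DC, 1 above the corner
    gains = 1.0 + weight * rng.normal(0.0, sigma, size=freqs.shape)
    return np.fft.irfft(spectrum * gains, n=len(signal))


fs = 250  # assumed sampling rate in Hz
t = np.arange(0, 10, 1 / fs)
ecg_like = np.sin(2 * np.pi * 1.2 * t)  # toy periodic signal, not a real ECG
```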

In one GAN-based liver lesion classification task with only 182 CT image regions of interest (ROIs), classical augmentation yielded 78.6% sensitivity and 88.4% specificity; adding GAN-generated synthetic images raised these to 85.7% sensitivity and 92.4% specificity. (source)

These strategies collectively enable robust model training, even when annotated data remains scarce. Still, every augmented dataset requires validation to avoid introducing clinically meaningless patterns.

Transfer learning approaches

Even with synthetic data and augmentation, many healthcare AI projects face limitations in sample size. Transfer learning helps bridge this gap by reusing knowledge from models trained on large datasets and adapting it to medical tasks.

A common strategy is to fine-tune a pretrained model on a smaller dataset while freezing the earlier layers. This allows the system to retain broad features while adjusting to domain-specific details.
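To make freezing concrete, the NumPy sketch below treats a fixed random projection as hypothetical "pretrained" early layers and trains only a logistic-regression head on top. In PyTorch the same effect is usually achieved by setting `requires_grad = False` on the backbone parameters; here the frozen weights are simply never updated. The data is invented.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for pretrained early layers: a fixed projection + ReLU.
W_frozen = rng.normal(size=(20, 16)) / np.sqrt(20)


def backbone(x):
    """Frozen feature extractor; fine-tuning below never updates W_frozen."""
    return np.maximum(x @ W_frozen, 0.0)


def fine_tune_head(x, y, lr=0.3, epochs=2000):
    """Fit only a logistic-regression head on standardized frozen features."""
    feats = backbone(x)
    mean, std = feats.mean(axis=0), feats.std(axis=0) + 1e-8
    feats = (feats - mean) / std
    w, b = np.zeros(feats.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))
        err = p - y  # gradient of the log-loss w.r.t. the logits
        w -= lr * feats.T @ err / len(y)
        b -= lr * err.mean()
    return w, b, mean, std


def predict(x, w, b, mean, std):
    feats = (backbone(x) - mean) / std
    return (feats @ w + b > 0).astype(int)


# Toy "local" dataset whose labels depend on the frozen features.
x_small = rng.normal(size=(200, 20))
scores = backbone(x_small) @ rng.normal(size=16)
y_small = (scores > np.median(scores)).astype(int)

W_before = W_frozen.copy()
w, b, mean, std = fine_tune_head(x_small, y_small)
train_acc = (predict(x_small, w, b, mean, std) == y_small).mean()
```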

In imaging, pretrained models provide a foundation for recognizing anatomical structures and disease patterns with fewer labeled examples. In clinical text, models trained on biomedical corpora bring an understanding of medical terminology, improving tasks such as diagnosis classification, information extraction, and risk prediction.

In practice, this means a hospital team can fine-tune a general model on a relatively small set of local patient scans or notes and reach clinically useful performance with far less labeled data and training time than building a model from scratch.

Beyond supervised transfer, self-supervised learning on large volumes of unlabeled medical data is gaining momentum. These models learn general representations from raw text, images, or signals and then adapt to smaller labeled datasets. The result is improved efficiency and performance, even when annotations are limited.
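Contrastive objectives are one common form of self-supervised learning: two augmented views of the same unlabeled input should embed close together, and views of different inputs far apart. The sketch below implements a one-directional InfoNCE loss in NumPy, similar in spirit to the objective used by methods such as SimCLR; the temperature of 0.1 is an illustrative choice.

```python
import numpy as np


def info_nce(z1, z2, temperature=0.1):
    """InfoNCE loss for two batches of embeddings of the same samples.

    Row i of z1 and row i of z2 are two augmented views of one unlabeled
    input (the positive pair); every other row of z2 acts as a negative.
    """
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature                # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)     # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))             # positives sit on the diagonal
```

Minimizing this loss over many unlabeled scans or signals yields an encoder whose embeddings can then be fine-tuned on the small labeled set.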

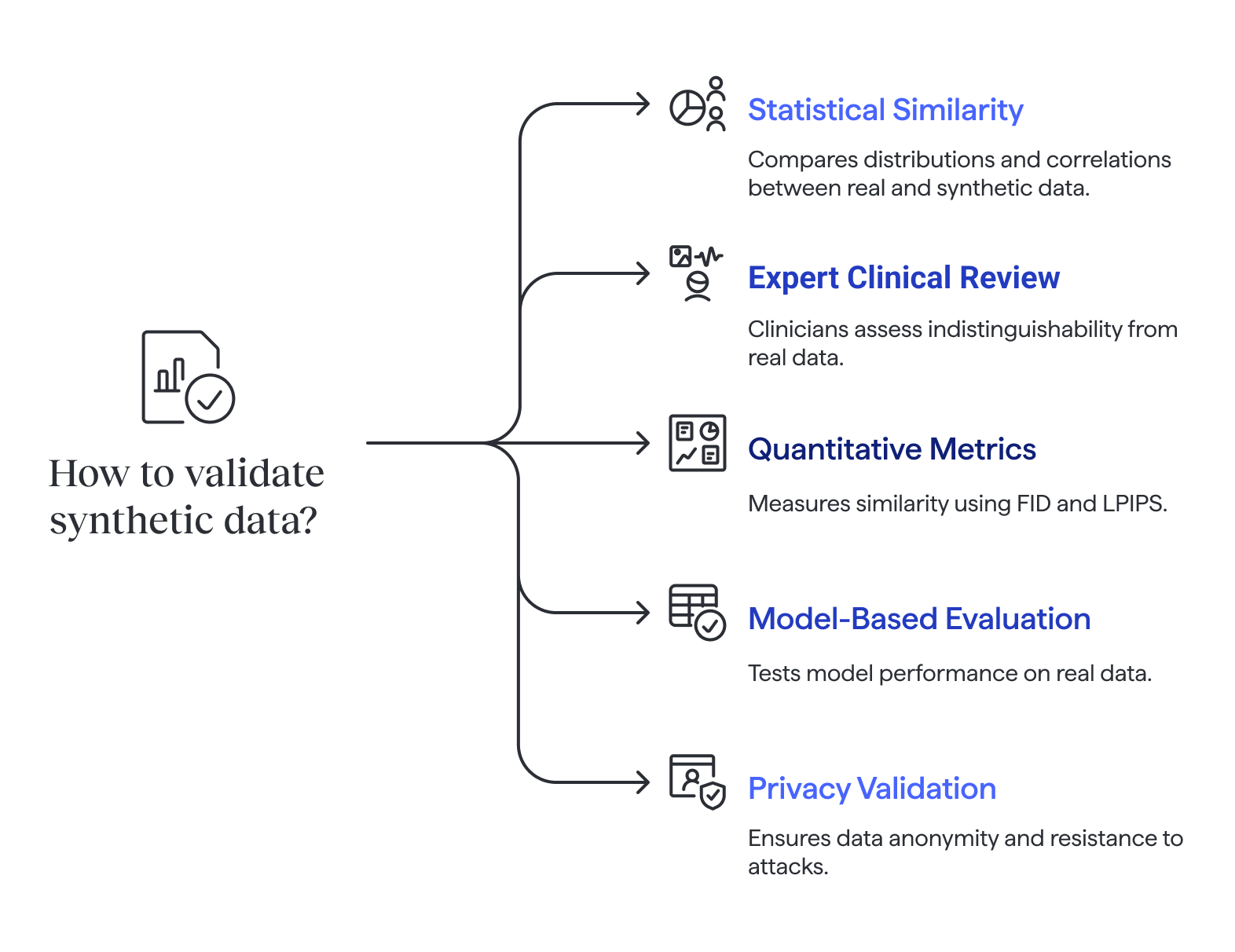

Validation methods

Of course, synthetic data is only valuable if it reflects real-world patterns and maintains patient privacy. That’s why validation must be multi-layered: it should confirm that the synthetic data preserves the statistical properties needed for analysis without reproducing individual records from the original data.

Statistical similarity checks

Statistical checks compare distributions, correlations, and other key properties between real and synthetic data.

Even when these checks pass, studies show that synthetic datasets often fail to preserve multi-signal correlations, such as relationships between different physiological signals, which can distort downstream models if overlooked.
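A first-pass statistical check can be sketched with SciPy: a two-sample Kolmogorov–Smirnov test per column plus the largest difference between the real and synthetic correlation matrices. The toy example uses invented data and shows why both are needed: shuffling each column independently leaves every marginal distribution intact, so the KS tests pass, yet it destroys the correlation between the two signals.

```python
import numpy as np
from scipy.stats import ks_2samp


def fidelity_report(real, synthetic, names, alpha=0.05):
    """Per-column KS tests plus the largest gap between correlation matrices."""
    report = {}
    for j, name in enumerate(names):
        result = ks_2samp(real[:, j], synthetic[:, j])
        report[name] = {"ks_stat": result.statistic, "similar": result.pvalue >= alpha}
    corr_gap = np.abs(np.corrcoef(real, rowvar=False) - np.corrcoef(synthetic, rowvar=False))
    report["max_corr_gap"] = float(corr_gap.max())
    return report


rng = np.random.default_rng(0)
hr = rng.normal(80, 10, 1000)
rr = 0.3 * hr + rng.normal(0, 2, 1000)  # toy signal correlated with heart rate
real = np.column_stack([hr, rr])
synthetic = np.column_stack([rng.permutation(hr), rng.permutation(rr)])
rep = fidelity_report(real, synthetic, ["heart_rate", "resp_rate"])
```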

Expert clinical review

Expert review adds another layer: clinicians or radiologists can perform blinded assessments, evaluating whether records or images are indistinguishable from real data. In some cases, experts are asked in Turing-test style experiments to tell real from synthetic. Difficulty in making the distinction suggests realism.
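Results of a real-versus-synthetic reading experiment can be summarized with an exact binomial test against 50% chance, as in the standard-library sketch below. The counts are hypothetical, and a non-significant result only shows that readers could not reliably tell the images apart in this sample, not that the images are clinically equivalent.

```python
from math import comb


def binomial_two_sided_p(correct, total, p=0.5):
    """Exact two-sided binomial p-value: probability of outcomes no likelier than observed."""
    probs = [comb(total, k) * p**k * (1 - p) ** (total - k) for k in range(total + 1)]
    observed = probs[correct]
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))


def turing_test_summary(correct, total):
    """Reader accuracy at labelling images as real or synthetic, tested against chance."""
    return {"accuracy": correct / total, "p_value": binomial_two_sided_p(correct, total)}


summary = turing_test_summary(56, 100)  # hypothetical: 56 of 100 images labelled correctly
```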

Quantitative performance metrics

Quantitative metrics complement human judgment by measuring how closely generated samples resemble real data. For images, FID (Fréchet Inception Distance) compares the feature distributions of real and synthetic image sets (lower is better), while LPIPS (Learned Perceptual Image Patch Similarity) measures the perceptual distance between pairs of images in a way that tracks human judgment. Together, expert inspection and quantitative scores provide strong evidence of quality.
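FID reduces to a closed-form distance between two Gaussians fitted to feature vectors. The sketch below assumes the features have already been extracted (standard FID uses Inception-v3 activations; any fixed embedding can be plugged in for experimentation) and uses `scipy.linalg.sqrtm` for the matrix square root.

```python
import numpy as np
from scipy import linalg


def frechet_distance(feats_real, feats_synth):
    """FID-style distance between two sets of feature vectors.

    FID = ||mu_r - mu_s||^2 + Tr(S_r + S_s - 2 (S_r S_s)^(1/2))
    """
    mu_r, mu_s = feats_real.mean(axis=0), feats_synth.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_s = np.cov(feats_synth, rowvar=False)
    covmean = np.real(linalg.sqrtm(cov_r @ cov_s))  # drop tiny imaginary parts from numerical error
    diff = mu_r - mu_s
    return float(diff @ diff + np.trace(cov_r + cov_s - 2.0 * covmean))
```

Shifting every feature by 1.0 leaves the covariances unchanged, so the distance equals the squared mean difference, which is a handy sanity check.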

Model-based evaluation

Model-based evaluation goes further. A model trained on synthetic data is tested on a held-out real dataset, measuring metrics such as accuracy, F1 score, or ROC-AUC. Because synthetic data is typically used precisely when real data is limited, sensitive, or restricted by privacy regulations, this train-on-synthetic, test-on-real setup is a direct way to confirm that the synthetic data captures clinically relevant patterns. Additional checks can probe robustness by testing on noisy labels, varied patient populations, or external hospital data.
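A train-on-synthetic, test-on-real (TSTR) evaluation can be sketched with NumPy alone. Plain gradient-descent logistic regression stands in for whatever model you would actually train, and both datasets in the usage example are invented toy data.

```python
import numpy as np


def train_logistic(x, y, lr=0.1, epochs=500):
    """Gradient-descent logistic regression (stand-in for any classifier)."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        w -= lr * x.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def roc_auc(y_true, scores):
    """ROC-AUC as the probability a positive outranks a negative (ties count half)."""
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    return (pos[:, None] > neg[None, :]).mean() + 0.5 * (pos[:, None] == neg[None, :]).mean()


def tstr_report(x_synth, y_synth, x_real, y_real):
    """Train on synthetic data, then score on held-out real data."""
    w, b = train_logistic(x_synth, y_synth)
    scores = x_real @ w + b
    pred = (scores > 0).astype(int)
    tp = int(np.sum((pred == 1) & (y_real == 1)))
    tn = int(np.sum((pred == 0) & (y_real == 0)))
    fp = int(np.sum((pred == 1) & (y_real == 0)))
    fn = int(np.sum((pred == 0) & (y_real == 1)))
    return {
        "accuracy": (tp + tn) / len(y_real),
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": 2 * tp / (2 * tp + fp + fn) if tp else 0.0,
        "roc_auc": float(roc_auc(y_real, scores)),
    }


rng = np.random.default_rng(0)


def make_toy(n):
    x = rng.normal(size=(n, 3))
    return x, (x[:, 0] + 0.5 * x[:, 1] > 0).astype(int)


x_synth, y_synth = make_toy(400)  # pretend: synthetic training set
x_real, y_real = make_toy(200)    # pretend: held-out real patients
report = tstr_report(x_synth, y_synth, x_real, y_real)
```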

Privacy and re-identification risks

Finally, privacy validation is critical. Tests include checking k-anonymity, which requires every record to share its quasi-identifiers (such as age band, sex, and postcode) with at least k-1 other records, and measuring resistance to membership inference attacks. Pseudonymized data replaces direct identifiers with artificial ones but may still contain real-world information that requires protection under privacy laws. Researchers may also check whether sensitive attributes could be inferred from synthetic datasets. Together, these steps reduce the risk of exposing patient information.
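k-anonymity can be checked by grouping records on their quasi-identifiers and finding the smallest group. The sketch also computes an exact-match rate (synthetic rows that copy a real row verbatim), a crude first screen that does not replace a proper membership inference evaluation. Field names and records are hypothetical.

```python
from collections import Counter


def min_group_size(records, quasi_identifiers):
    """Size of the smallest equivalence class over the quasi-identifiers (the dataset's k)."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def is_k_anonymous(records, quasi_identifiers, k):
    return min_group_size(records, quasi_identifiers) >= k


def exact_match_rate(synthetic, real, fields):
    """Fraction of synthetic rows identical to some real row on the given fields."""
    real_keys = {tuple(r[f] for f in fields) for r in real}
    return sum(tuple(s[f] for f in fields) in real_keys for s in synthetic) / len(synthetic)


rows = [
    {"age_band": "60-69", "zip3": "021", "sex": "F", "dx": "E11"},
    {"age_band": "60-69", "zip3": "021", "sex": "F", "dx": "I10"},
    {"age_band": "70-79", "zip3": "021", "sex": "M", "dx": "E11"},
    {"age_band": "70-79", "zip3": "021", "sex": "M", "dx": "J45"},
]
qi = ["age_band", "zip3", "sex"]
```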

Ethical and regulatory considerations

Using synthetic data in healthcare AI requires meeting strict ethical and regulatory standards. HIPAA and GDPR compliance are essential, and each synthetic record should be tested to confirm it cannot be re-identified. Fully synthetic data contains no real patient information, while partially synthetic data combines real and artificial elements to balance privacy and utility. Membership inference resistance, as well as traceable documentation of the generation pipeline, supports both safety and regulatory readiness.

Synthetic data can support training and testing but is rarely sufficient for regulatory approval: medical devices seeking FDA authorization or CE marking still require validation against real clinical datasets. Synthetic data can also supplement publicly available datasets, although organizations operating in the European Union must still comply with strict data protection regulations such as the GDPR. Privacy-preserving methods such as DP-GANs, which train the generator with per-sample gradient clipping combined with calibrated noise, can further reduce risk.

Taken together, rigorous validation, transparency, and privacy-focused techniques make it possible to use synthetic data responsibly to improve model robustness while respecting ethical and legal boundaries.

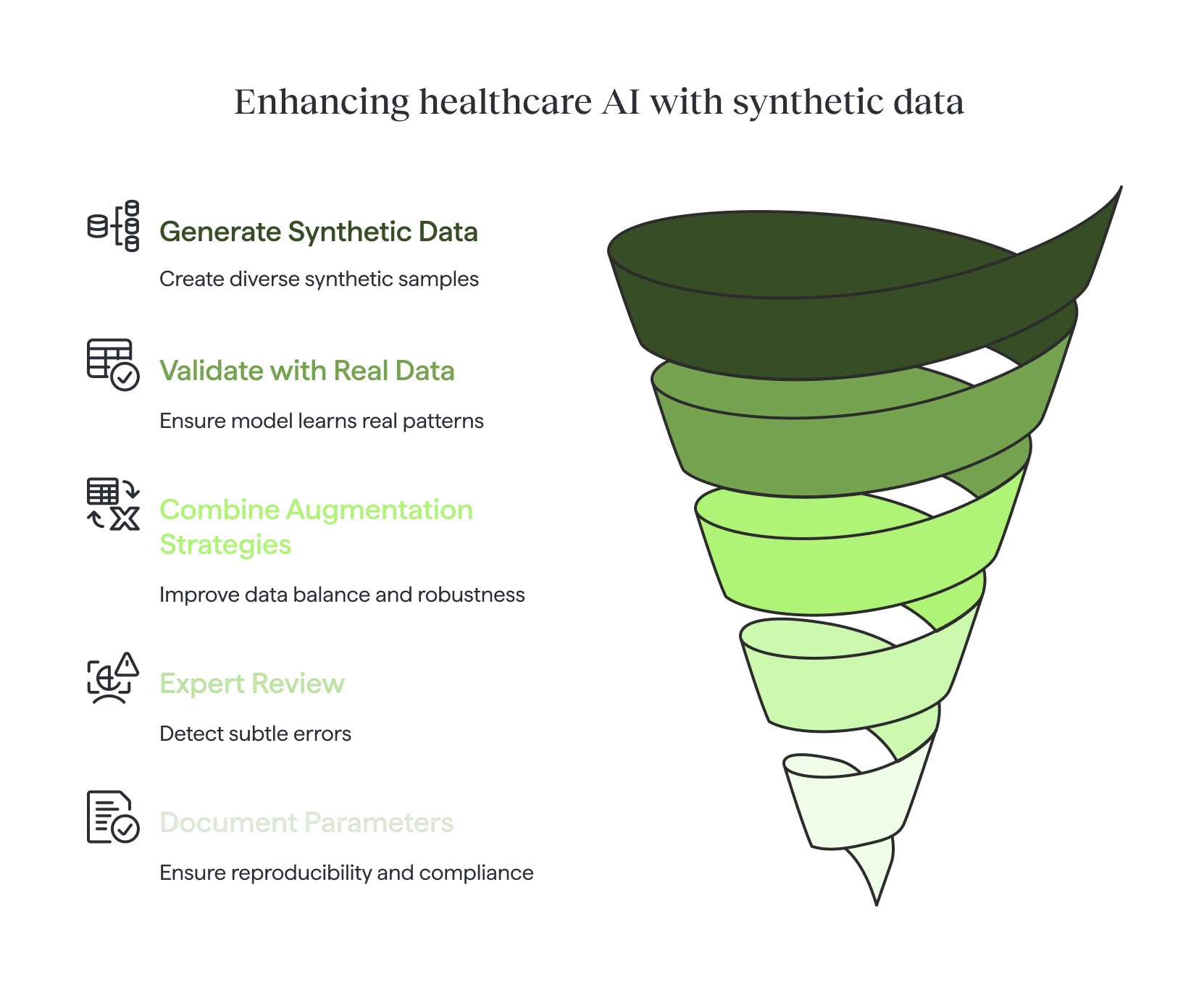

Practical advice checklist

When working with limited datasets, synthetic data and augmentation can be powerful tools—but only when applied responsibly.

- Validate on a real, held-out dataset. Synthetic data can expand diversity, but only real patient records confirm whether the model has learned meaningful patterns.

- Avoid training exclusively on synthetic data. Synthetic samples should complement, not replace, real-world examples.

- Combine multiple augmentation strategies. Especially for rare classes, blending geometric, photometric, and generative methods improves balance and robustness.

- Include expert review where possible. Clinicians can detect subtle errors that automated checks may miss.

- Document all generation parameters. Clear records of algorithms, seeds, and settings ensure reproducibility and regulatory readiness.

Conclusion

Working with limited datasets is the rule rather than the exception in healthcare AI. Synthetic generation, careful augmentation, and transfer learning can bridge critical gaps, but only when paired with rigorous validation and ethical safeguards.

Used responsibly, these methods do more than help researchers overcome scarcity: they bring medical AI systems closer to real clinical impact, even without big data. Data may be scarce, but ingenuity isn’t.

In the end, the future of medical AI will belong not to those with the most data, but to those who know how to make the most of the data they have.

Frequently Asked Questions

Synthetic training data is artificially generated medical data created through computational methods that mimics the statistical properties and patterns of real patient data. In healthcare AI, synthetic data is generated using advanced techniques like generative adversarial networks (GANs), variational autoencoders (VAEs), diffusion models, and large language models to create realistic patient records, medical images, clinical notes, and electronic health records without using actual patient information. This approach enables healthcare organizations to train machine learning models while overcoming data scarcity challenges and maintaining strict privacy compliance with regulations like HIPAA and GDPR.

Healthcare AI development faces a persistent challenge: insufficient training data. Several factors contribute to this scarcity, including strict data privacy regulations, the high cost of expert medical annotation, the rarity of certain diseases and conditions, and the complexity of obtaining diverse patient datasets. Real-world medical datasets are often small, imbalanced, or incomplete, leading to AI models that overfit and fail to generalize to clinical settings. Synthetic data generation addresses these limitations by artificially expanding datasets, balancing rare conditions, and protecting patient privacy while providing the volume and variety needed to build robust, clinically reliable AI systems.

Synthetic data generation and data augmentation are complementary but distinct approaches to addressing limited training data. Data augmentation creates variations of existing real data through transformations like rotations, flips, noise injection, and contrast adjustments for medical images, or synonym replacement and paraphrasing for clinical text. These techniques expand datasets while staying grounded in actual patient records. Synthetic data generation, by contrast, creates entirely new artificial samples using generative models that learn underlying patterns from real data. While augmentation modifies what exists, synthetic generation produces novel records that never existed. Both strategies work best when combined: augmentation adds variety to real data, while synthetic generation fills gaps and balances rare classes.

Widely used techniques for generating synthetic medical images include diffusion models, StyleGAN2-ADA, and conditional GANs, each suited to different imaging modalities and use cases. Diffusion models produce high-fidelity X-rays, CT scans, and MRIs by starting from random noise and denoising it step by step into a realistic image. StyleGAN2-ADA generates photorealistic dermoscopy images and pathology slides; it performs best with 5,000 or more training images but can also be fine-tuned on smaller datasets. Conditional GANs allow targeted generation based on specific attributes, such as disease type, patient demographics, or anatomical region. Advanced augmentation techniques like elastic deformation can simulate realistic pathological variations. All synthetic medical images require clinical validation by radiologists or specialists to ensure anatomical accuracy and diagnostic relevance before use in training AI models.
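The forward (noising) half of a diffusion model has a simple closed form, sketched below in NumPy. The linear schedule from 1e-4 to 0.02 over 1,000 steps is a common default, assumed here; a trained network (not shown) would learn to predict the added noise so that the process can be run in reverse, from pure noise to an image.

```python
import numpy as np


def linear_beta_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances for the forward process."""
    return np.linspace(beta_start, beta_end, steps)


def q_sample(x0, t, alpha_bar, rng):
    """Jump straight to step t: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps, eps


def denoising_loss(predicted_eps, true_eps):
    """Training target: the network predicts the added noise, scored by mean squared error."""
    return float(np.mean((predicted_eps - true_eps) ** 2))


betas = linear_beta_schedule()
alpha_bar = np.cumprod(1.0 - betas)  # fraction of signal variance kept at each step
```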

No, synthetic data should augment rather than replace real patient data when training healthcare AI systems. While synthetic data effectively expands datasets and protects privacy, it cannot fully capture the complexity, variability, and unpredictable patterns found in real clinical environments. Regulators such as the FDA, and the notified bodies that assess devices for CE marking, require validation against real clinical datasets for medical device approval. Models trained exclusively on synthetic data risk learning artificial patterns or biases introduced during generation, leading to poor performance on actual patients. Best practice involves combining synthetic data with real patient records, using synthetic samples to balance rare conditions, extend limited datasets, and improve model robustness, while always validating performance on held-out real-world data to confirm clinical utility and safety.

Validating synthetic training data requires a multi-layered approach combining statistical, clinical, performance-based, and privacy assessments. Statistical validation includes distribution comparison tests, correlation analysis, and verification that synthetic data preserves key properties of real data without exposing patient information. Expert clinical review involves blinded assessments where healthcare professionals evaluate whether synthetic records or images are distinguishable from real data. Model-based evaluation trains AI systems on synthetic data and tests them against held-out real patient datasets, measuring accuracy, sensitivity, specificity, and ROC-AUC scores. Quantitative image metrics like Fréchet Inception Distance and Learned Perceptual Image Patch Similarity assess realism. Privacy validation confirms k-anonymity, resistance to membership inference attacks, and protection against re-identification risks. This comprehensive validation framework ensures synthetic data improves model performance without introducing artifacts or compromising patient privacy.

Transfer learning is a machine learning approach that reuses knowledge from models pretrained on large datasets and adapts them to specific medical tasks with limited data. Instead of training healthcare AI models from scratch, which requires massive annotated datasets, transfer learning starts with a foundation model trained on millions of general images or text documents, then fine-tunes it on smaller medical datasets. For medical imaging, pretrained models like ResNet or Vision Transformers provide baseline understanding of visual features, which can be adapted to recognize disease patterns with fewer labeled examples. For clinical text, models trained on biomedical literature bring domain knowledge of medical terminology. This approach dramatically reduces the data requirements, training time, and computational costs while improving model performance, making it essential for healthcare AI projects facing sample size limitations or rare disease scenarios.

SMOTE (Synthetic Minority Over-sampling Technique) addresses class imbalance in healthcare datasets by generating synthetic examples of underrepresented conditions or outcomes. In medical contexts where rare diseases or adverse events make up only a small fraction of available data, SMOTE creates new minority-class samples by interpolating between existing examples in feature space. The algorithm identifies k-nearest neighbors for each minority sample, then generates synthetic points along the line segments connecting these neighbors. This balances the dataset without simply duplicating existing records, which would lead to overfitting. SMOTE proves particularly valuable for tabular healthcare data like electronic health records, clinical trial results, and structured patient demographics. However, it must be applied carefully to maintain clinical validity, avoid introducing physiologically impossible combinations, and be combined with expert review to ensure generated samples remain medically meaningful.
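The interpolation step described above fits in a few lines of NumPy. This is a minimal sketch for continuous features only; for production use, imbalanced-learn provides `SMOTE` (and `SMOTENC` for mixed categorical and numerical data) with a `fit_resample` method.

```python
import numpy as np


def smote(x_minority, n_new, k=5, seed=0):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation.

    For each new point: pick a minority sample, pick one of its k nearest
    minority neighbours, and place the point at a random position on the
    segment between them. Requires at least two minority samples.
    """
    rng = np.random.default_rng(seed)
    n = len(x_minority)
    k = min(k, n - 1)
    dists = np.linalg.norm(x_minority[:, None, :] - x_minority[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)
    chosen = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # position along the segment, in [0, 1)
    return x_minority[base] + gap * (x_minority[chosen] - x_minority[base])


minority = np.random.default_rng(1).normal(size=(12, 4))
new_samples = smote(minority, 30)
```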

Synthetic healthcare data must comply with major privacy regulations including HIPAA in the United States and GDPR in the European Union, even though it contains no direct patient identifiers. Under HIPAA, fully synthetic data that contains zero real patient information may not be considered Protected Health Information, but partially synthetic datasets that blend real and artificial elements still require safeguards. GDPR's privacy-by-design principles apply to synthetic data generation processes, requiring organizations to demonstrate that synthetic records cannot be used to re-identify individuals. Key compliance requirements include conducting privacy impact assessments, implementing technical safeguards like differential privacy, maintaining audit trails of data generation methods, testing resistance to membership inference attacks, and ensuring k-anonymity standards. Healthcare organizations must document their synthetic data generation pipeline, validate that no real patient information leaks through, and establish clear governance policies before deploying synthetic training data in AI development.

The choice between data augmentation and synthetic data generation depends on your dataset size, resources, and specific healthcare AI objectives. Use data augmentation as a first-line strategy when you have at least a moderate baseline of real patient data and need to improve model generalization through controlled variations. Augmentation works best for improving robustness to acquisition differences, lighting conditions, imaging angles, and minor variations in medical images or clinical text. Choose synthetic data generation when facing severe data scarcity, extreme class imbalance with rare conditions, or situations where collecting additional real patient data is impractical, too expensive, or restricted by privacy regulations. Synthetic generation excels at creating entirely new examples of underrepresented diseases, filling gaps in patient demographics, and producing datasets for rare scenarios. The optimal approach typically combines both: apply augmentation to real data for variety, then add synthetic generation to balance rare classes and expand overall dataset size while maintaining clinical validity through rigorous validation.

Synthetic training data carries several important limitations and risks that healthcare AI developers must address. The primary concern is artifact introduction, where generative models create unrealistic patterns, impossible anatomical combinations, or spurious correlations that mislead AI systems during training. Studies show synthetic datasets often fail to preserve complex multi-signal correlations found in real physiological data. Over-reliance on synthetic data can lead to models that perform well in testing but fail in real clinical environments due to distribution shift. Privacy risks remain even with synthetic data, as sophisticated attacks can sometimes extract information about the original training data or re-identify patients through inference. Synthetic data may also perpetuate or amplify existing biases present in the original dataset. Regulatory acceptance remains limited, with agencies requiring real-world validation regardless of synthetic data quality. To mitigate these risks, always combine synthetic with real data, conduct thorough validation across statistical, clinical, and privacy dimensions, maintain expert oversight, and document all generation parameters for reproducibility and regulatory review.

The cost of generating synthetic healthcare data varies significantly based on data type, volume, quality requirements, and chosen methodology. For clinical text generation, using large language model APIs like GPT-4 can cost anywhere from several hundred to thousands of dollars, depending on the number of records needed and prompt complexity. Medical image generation using diffusion models or GANs requires substantial computational resources, with GPU costs potentially reaching thousands of dollars for training generative models on sufficient real data, though pretrained models can reduce this investment. Structured data generation for electronic health records using techniques like CTGAN or SMOTE typically costs less and is often feasible on standard computing infrastructure. Additional expenses include expert annotation for validation, privacy audits, statistical testing, and clinical review to ensure synthetic data quality. Commercial synthetic data platforms offer subscription-based pricing, while open-source tools reduce direct costs but require in-house expertise. Overall, synthetic data generation is often more cost-effective than collecting, annotating, and securing equivalent volumes of real patient data, especially for rare conditions or specialized medical scenarios.

Fully synthetic healthcare data contains zero real patient information and is entirely computationally generated based on statistical patterns learned from original datasets, offering maximum privacy protection. Every data point, from demographics to diagnoses to imaging features, is artificially created without direct correspondence to actual patients. This approach minimizes re-identification risks and simplifies regulatory compliance but may sacrifice some realism and clinical utility. Partially synthetic data combines real patient information with artificial elements, typically replacing only sensitive attributes or generating synthetic values for missing data points while preserving authentic clinical relationships. This hybrid approach balances privacy protection with data quality, maintaining more complex correlations and edge cases found in real-world medicine. Partially synthetic datasets often retain greater clinical validity and statistical fidelity but require stronger privacy safeguards, rigorous testing for re-identification risks, and more careful regulatory consideration. The choice depends on privacy requirements, intended use case, and whether the benefits of increased realism outweigh the additional privacy governance needed for partially synthetic approaches.

Ensuring clinical validity of synthetic medical images requires a comprehensive evaluation framework combining expert assessment, quantitative metrics, and real-world testing. Start with blinded clinical review where radiologists or specialists evaluate synthetic images alongside real scans without knowing which is which, assessing anatomical accuracy, realistic pathology presentation, and diagnostic utility. Use Turing-test style experiments where difficulty distinguishing synthetic from real images suggests high quality. Implement quantitative metrics such as Fréchet Inception Distance to measure distribution similarity and Learned Perceptual Image Patch Similarity for human-like perceptual comparison. Verify that synthetic images respect anatomical constraints, such as proper organ positioning, realistic tissue density in CT scans, and appropriate signal characteristics in MRI sequences. Test models trained on synthetic images against real patient data to confirm they maintain diagnostic accuracy, sensitivity, and specificity in clinical settings. Validate across diverse patient populations, multiple acquisition devices, and various imaging protocols. Document any systematic differences or artifacts, and establish clear acceptance criteria before incorporating synthetic images into training pipelines for healthcare AI systems.

Yes, synthetic data proves particularly valuable for rare disease AI model development, where the small number of patient cases creates severe data scarcity. Generative models can create artificial examples of rare conditions by learning patterns from the few available cases and generating variations that reflect realistic disease presentations. This addresses severe class imbalance, prevents overfitting to limited samples, and provides sufficient training data for deep learning models that typically require thousands of examples. Techniques like conditional GANs allow targeted generation of specific rare disease presentations, patient demographics, or disease stages that may be underrepresented. SMOTE and similar oversampling methods balance rare disease classes in structured datasets. However, synthetic data for rare diseases requires exceptional rigor in validation, as generative models may hallucinate unrealistic disease features when learning from minimal examples. Expert clinical oversight becomes even more critical to verify that synthetic rare disease cases remain physiologically plausible. Best practice combines synthetic rare disease data with transfer learning from related common conditions, thorough validation against any available real rare disease cases, and testing across external datasets to confirm the model generalizes to actual rare disease patients in clinical practice.

Several specialized tools and open-source libraries facilitate synthetic healthcare data generation across different modalities. For medical imaging, Stable Diffusion and MONAI provide frameworks for diffusion model-based generation, while StyleGAN2-ADA offers photorealistic image synthesis. The Synthetic Data Vault (SDV) library supports tabular healthcare data generation with models like CTGAN, which is designed for tables that mix numerical and categorical columns. For clinical text, general-purpose LLMs such as GPT-4 or Claude, or fine-tuned domain-specific generative models, enable realistic clinical note generation; encoder models such as BioBERT and ClinicalBERT do not generate text themselves but are useful for context-aware synonym selection and for filtering generated notes. The Python ecosystem offers implementations of VAEs, GANs, and SMOTE through libraries like TensorFlow, PyTorch, and imbalanced-learn. Synthea generates realistic synthetic patient records and electronic health records with customizable disease progression models. For privacy-preserving generation, libraries like Opacus implement differentially private training for PyTorch models, while PyCox provides time-to-event (survival) models that can help evaluate synthetic survival data. Commercial platforms like Mostly AI, Gretel, and Syntegra offer end-to-end solutions with privacy guarantees and regulatory support. The choice of tool depends on data type, required privacy level, computational resources, and whether you need custom generation logic or ready-made solutions with compliance features built in.

Differential privacy provides a mathematical bound on how much synthetic data generation can reveal about any individual patient in the training set. When applied to synthetic data generation, differential privacy adds calibrated random noise during model training, ensuring that the presence or absence of any single patient's data has only a limited, quantifiable effect on the generated output. DP-GANs (Differentially Private GANs) implement this by clipping each training example's gradient to a maximum norm and adding Gaussian noise scaled to that clipping bound; the noise level, together with the sampling rate and the number of training steps, determines the privacy budget epsilon (ε). Lower epsilon values provide stronger privacy but may reduce synthetic data quality. The privacy budget bounds the maximum information leakage, and healthcare applications often target epsilon values between 0.1 and 10 depending on sensitivity requirements. Differential privacy helps protect against membership inference attacks, where adversaries attempt to determine if specific patients were in the training data. However, strong differential privacy constraints can limit the utility and realism of synthetic data, creating a trade-off between privacy protection and model performance. Healthcare organizations must carefully balance these considerations based on regulatory requirements, use case sensitivity, and acceptable privacy risk tolerance when implementing differentially private synthetic data generation.
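The core DP-SGD update behind DP-GANs and similar methods is sketched below in NumPy. It assumes per-example gradients are already available; converting the noise multiplier into an epsilon requires a privacy accountant, such as the one in Opacus, which is not shown.

```python
import numpy as np


def dp_sgd_step(params, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum, add Gaussian noise, average.

    per_example_grads has shape (batch_size, n_params). The noise standard
    deviation is noise_multiplier * clip_norm, so no single patient's
    gradient can move the parameters by more than a bounded amount.
    """
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    clipped = per_example_grads * np.minimum(1.0, clip_norm / (norms + 1e-12))
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / len(per_example_grads)
    return params - lr * noisy_mean
```

With `noise_multiplier=0` the function reduces to clipped SGD, which bounds each example's influence but provides no differential privacy guarantee on its own.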

Comprehensive documentation of synthetic data generation ensures reproducibility, supports regulatory compliance, and enables quality assurance for healthcare AI systems. Best practices include:

- Algorithmic details: record the generative model architecture, training hyperparameters, random seeds, and software versions used.

- Source data: document the original real dataset's size, distribution, source, collection methods, and any preprocessing applied.

- Data splits: specify exactly which real samples were used to train the generators and which were held out for validation.

- Generation parameters: log the number of synthetic samples created, conditioning variables used, rejection criteria, and any post-processing filters applied.

- Validation results: record statistical similarity tests, expert review outcomes, model performance metrics, and privacy assessment findings.

- Audit trails: preserve records of who generated the data, when, why, and under what authority.

- Limitations: disclose known artifacts, failure modes, and scenarios where the synthetic data should not be used.

- Samples and versioning: store sample synthetic records alongside the documentation for transparency, and keep both the generation code and the produced datasets under version control.

This documentation supports regulatory submissions, enables future audits, facilitates collaboration, and ensures that anyone using the synthetic data understands its provenance, quality, and appropriate applications.
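One lightweight way to apply these practices is to write a machine-readable manifest next to each synthetic dataset. The sketch below uses only the Python standard library. The field names, the `build_manifest` and `fingerprint` helpers, and all example values are hypothetical rather than any standard schema; a real project would extend them with full validation reports and audit details.

```python
import hashlib
import json
import platform
from datetime import datetime, timezone


def fingerprint(records):
    """Stable SHA-256 digest of a list of JSON-serialisable records, so the
    exact data a manifest describes can be verified later."""
    digest = hashlib.sha256()
    for record in records:
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def build_manifest(generator, real_train_ids, real_holdout_ids, synthetic_rows,
                   validation, limitations, generated_by, purpose):
    """Assemble a provenance record for one synthetic dataset release."""
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "generated_by": generated_by,
        "purpose": purpose,
        "environment": {"python": platform.python_version()},
        "generator": generator,  # architecture, hyperparameters, seed, versions
        "real_data_split": {
            # hash sorted IDs so the digest does not depend on input order
            "train_ids_sha256": fingerprint(sorted(real_train_ids)),
            "holdout_ids_sha256": fingerprint(sorted(real_holdout_ids)),
            "n_train": len(real_train_ids),
            "n_holdout": len(real_holdout_ids),
        },
        "synthetic_data": {
            "n_rows": len(synthetic_rows),
            "sha256": fingerprint(synthetic_rows),
        },
        "validation": validation,
        "limitations": limitations,
    }


# All values below are hypothetical placeholders
manifest = build_manifest(
    generator={"model": "CTGAN", "epochs": 300, "random_seed": 7,
               "library_version": "x.y.z"},
    real_train_ids=["p003", "p001", "p002"],
    real_holdout_ids=["p004"],
    synthetic_rows=[{"age": 64, "hba1c": 7.1}, {"age": 58, "hba1c": 6.4}],
    validation={"expert_review": "pending"},
    limitations=["Rare comorbidities under-represented"],
    generated_by="data-science-team",
    purpose="Prototype training set for a readmission model",
)
print(json.dumps(manifest, indent=2))
```

Committing the manifest to the same version-controlled repository as the generation code ties each dataset hash to the exact code and settings that produced it.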

The time required to generate synthetic healthcare data varies widely with data type, volume, model complexity, and available computational resources. For clinical text generated through API-based large language models, producing thousands of synthetic patient notes might take hours to days, depending on prompt complexity and rate limits. Diffusion models can generate a medical image in seconds at inference time, but training a custom generative model from scratch can take days or weeks of GPU time. Starting from a pretrained model removes most of that training cost, so even a large image dataset can be produced in minutes to hours. Tabular data generation with CTGAN or similar models typically completes in minutes to hours for moderate-sized datasets on standard hardware. High-resolution 3D medical imaging or whole-slide pathology generation may require days of computation even on powerful GPUs. Validation adds further time: statistical testing, expert clinical review, model evaluation, and privacy assessments can extend the timeline by weeks. Data preprocessing, model fine-tuning, and iterative refinement based on validation results lengthen the process further. For production healthcare AI projects, plan for weeks to months from initial data assessment to a validated synthetic dataset, although rapid prototyping with existing tools can produce initial results in days.
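Rough timelines like these are easier to defend when the arithmetic is written down. The sketch below estimates a lower bound on wall-clock time for API-based note generation, assuming the provider's requests-per-minute limit is the bottleneck, and sums per-phase day ranges into a project range. The helper names and every number in the example are hypothetical planning inputs, not benchmarks.

```python
def api_generation_hours(n_notes, requests_per_minute, calls_per_note=1):
    """Lower-bound wall-clock hours to generate n_notes through a rate-limited
    API, ignoring latency, retries, and rejected generations."""
    if requests_per_minute <= 0:
        raise ValueError("requests_per_minute must be positive")
    return n_notes * calls_per_note / requests_per_minute / 60


def project_days(phases):
    """Sum (low, high) day estimates across project phases."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


# Hypothetical: 10,000 notes, a draft plus a self-check call per note, 50 RPM
hours = api_generation_hours(10_000, requests_per_minute=50, calls_per_note=2)
print(f"{hours:.1f} h of generation")  # 6.7 h of generation

# Hypothetical per-phase ranges in working days
phases = {
    "preprocessing": (2, 5),
    "generation_and_tuning": (1, 5),
    "statistical_checks": (2, 5),
    "expert_review": (10, 20),
    "privacy_assessment": (5, 10),
}
print(project_days(phases))  # (20, 45)
```

In this hypothetical plan, generation itself takes under a day while the validation phases account for most of the range, consistent with the point that validation can add weeks.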
